(mood.wav)

Overview

Music is one of my passions, and I love using Spotify’s “Your Daily Mixes” feature to get my day going. It’s incredible that Spotify is able to bucket songs together by genre, and overall it’s a very satisfying product to use. However, in some cases, the way these mixes are structured can lead to a negative user experience.

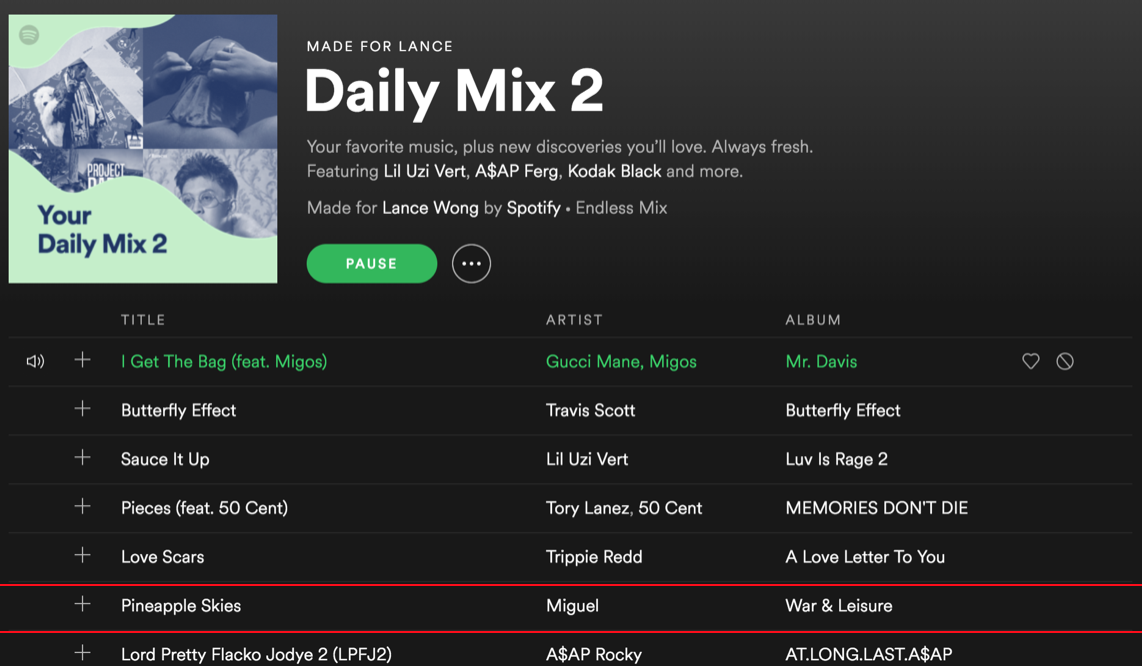

Take this playlist, for example. It has a great selection of rap and trap songs, and I appreciate the artist diversity. I would probably use this playlist for something high-energy, like going out for a run or throwing a party. But when “Pineapple Skies” by Miguel (a slower, poppy, RnB-type song that sounds more like Pharrell’s “Happy” than Gucci Mane’s “I Get The Bag”) appears in my playlist, the flow changes abruptly, especially when the next song in the list is LPFJ2. See below for a little snippet:

In an attempt to smooth out this sudden change in flow, I propose an application that analyzes song lyrics, groups similar songs together, and creates a mix that is more like a DJ set than your average playlist.

Obtaining data

I first pulled ~44,000 songs from Spotify-curated Hip-Hop/Rap/RnB playlists. From there, I used the song list to perform two tasks:

- Pull audio features from the Spotify API

- Find and scrape song lyrics using BeautifulSoup and the Genius API.

Although many sites post lyrics, scraping them can be a difficult task. If you’re interested in collecting lyrics in general, I recommend taking a look at pylyrics3 as well as the lyrics scraper by stanleycyang. I ended up writing a custom scraper based on stanleycyang’s for my dataset.

Model Development

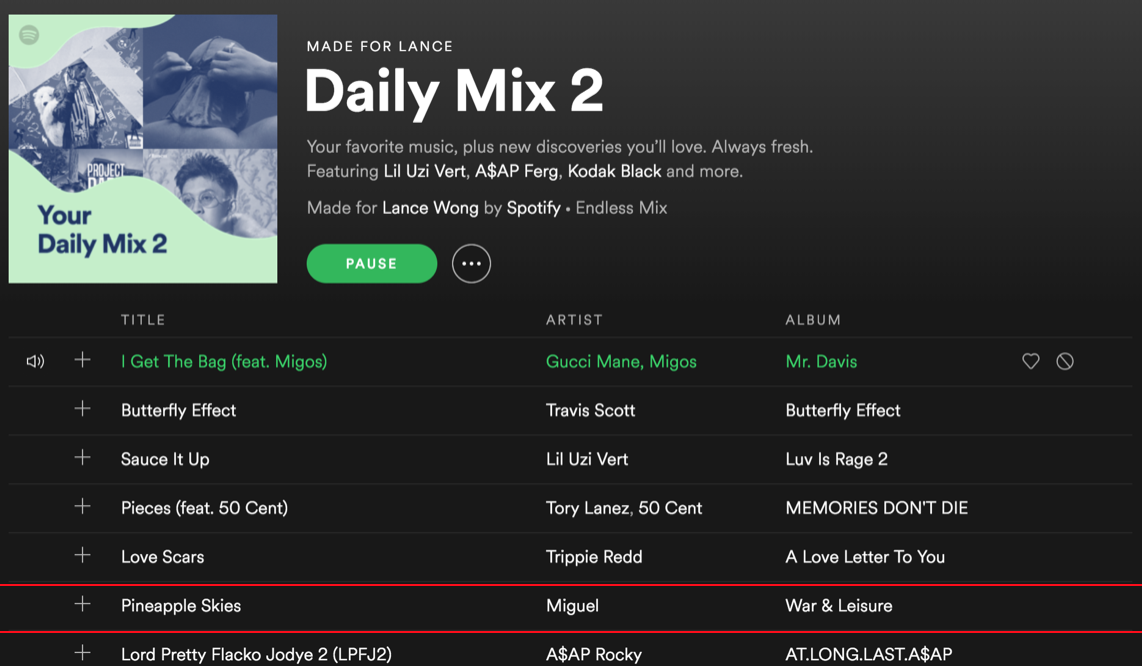

After cleaning my corpus by removing stopwords and lemmatizing, I trained a doc2vec model on it to find similarities between songs. doc2vec is an adaptation of word2vec: in addition to finding similarities between words (assuming we’re using PV-DM), it can find similarities between sentences and labels. In this case, those are song lines and songs. The end result is a 150-dimensional vector for each song in the dataset. Based on these vectors, my doc2vec model was able to make some interesting comparisons:

“Thinkin Bout You” by Frank Ocean, it seems, is fairly similar to “Bad Blood” by NAO (both artists are extremely talented, and I highly recommend you check them out if you haven’t before!). Looking through the lyrics, you can see a shared sense of longing and wistfulness. The only difference between the two is that Frank is talking about an ex-lover, while NAO is speaking about a friend she had a falling out with.
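Comparisons like this come down to measuring how close two song vectors are. The snippet below is a minimal sketch of that lookup, assuming cosine similarity (the usual choice for doc2vec vectors) and using made-up 3-dimensional vectors in place of the real 150-dimensional ones. The song names and values are placeholders, not data from the project.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def most_similar(query, song_vectors, top_n=3):
    """Rank every other song by cosine similarity to `query`."""
    target = song_vectors[query]
    scored = [
        (title, cosine_similarity(target, vec))
        for title, vec in song_vectors.items()
        if title != query
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


# Placeholder vectors: song_a and song_b point in nearly the same direction.
toy_vectors = {
    "song_a": [0.9, 0.1, 0.0],
    "song_b": [0.8, 0.2, 0.1],
    "song_c": [0.0, 0.1, 0.9],
}
print(most_similar("song_a", toy_vectors, top_n=2))
```

With these toy values, `song_b` ranks ahead of `song_c` as the closest match to `song_a`.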

Developing Clusters

With the 150-dimensional space obtained from the doc2vec model, I wanted to cluster the songs into groups. First, I used Non-negative Matrix Factorization to reduce the space to 20 components. Then, using the silhouette score, I tried to estimate the right number of clusters for good resolution:

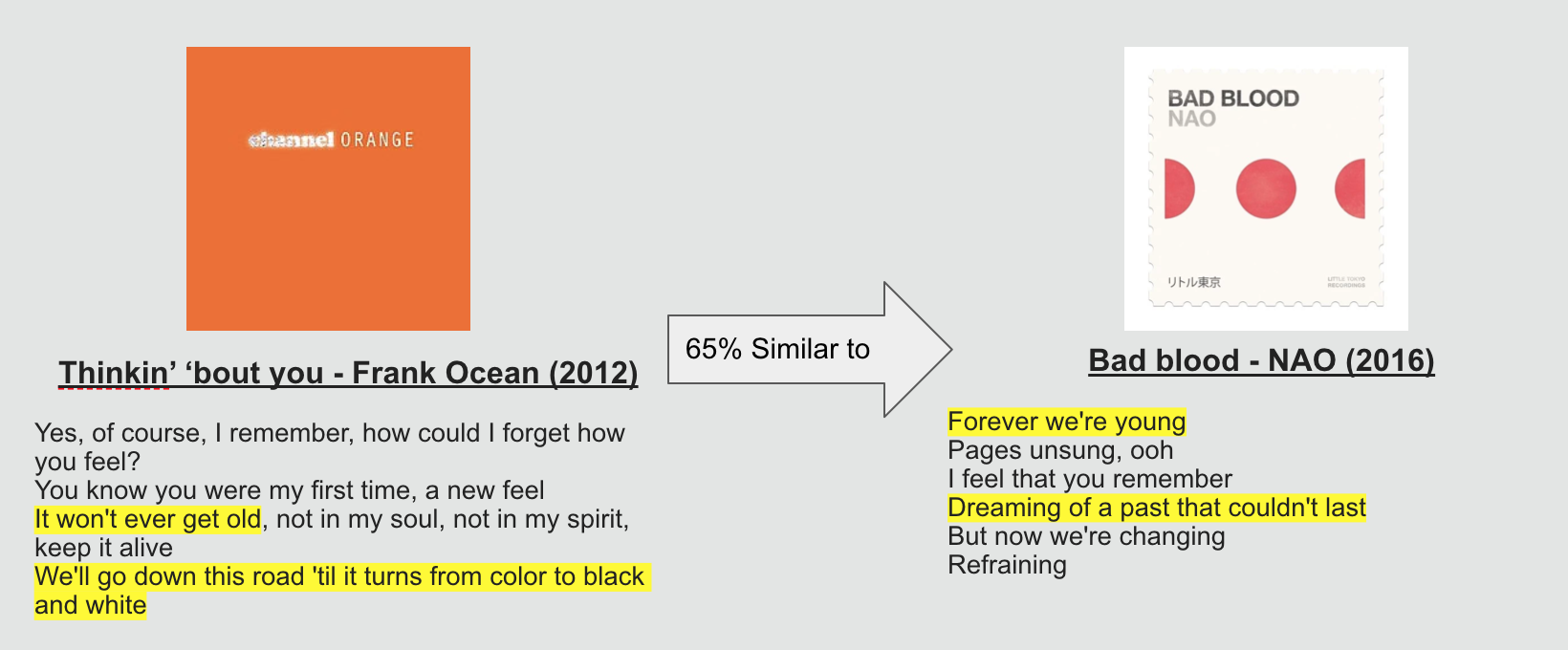
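For readers unfamiliar with the metric, here is a small, dependency-free sketch of how a silhouette score is computed. It is illustrative only (in practice a library routine would do this), and the four 2-D points are invented. For each point, `a` is the mean distance to the rest of its own cluster and `b` is the mean distance to the nearest other cluster; the point's score is `(b - a) / max(a, b)`.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    def mean_dist(i, members):
        return sum(math.dist(points[i], points[j]) for j in members) / len(members)

    scores = []
    for i, label in enumerate(labels):
        own = [j for j in clusters[label] if j != i]
        if not own:
            scores.append(0.0)  # convention: singleton clusters score 0
            continue
        a = mean_dist(i, own)
        b = min(
            mean_dist(i, members)
            for other, members in clusters.items()
            if other != label
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


# Two tight, well-separated groups of invented points.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(silhouette_score(points, [0, 0, 1, 1]))  # sensible grouping, close to 1
print(silhouette_score(points, [0, 1, 0, 1]))  # poor grouping, below 0
```

Scores near 1 mean tight, well-separated clusters; scores near or below 0 mean points sit closer to a neighboring cluster than to their own.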

My dataset’s silhouette score peaks at 2 clusters and decreases as the number of clusters increases, but it spikes at 5 clusters before dropping significantly. I then found the songs closest to each K-means centroid and used them to hand-label the clusters. Let’s see what the clusters look like in a t-SNE visualization.

With the exception of “Found Love” and “Lost Love”, the clusters seem to be fairly well separated. For the two love categories, I posit that “I hate that I love you” and “I love you too much to hate you” could be easily confused by the model (love is, after all, the hardest emotion to understand).

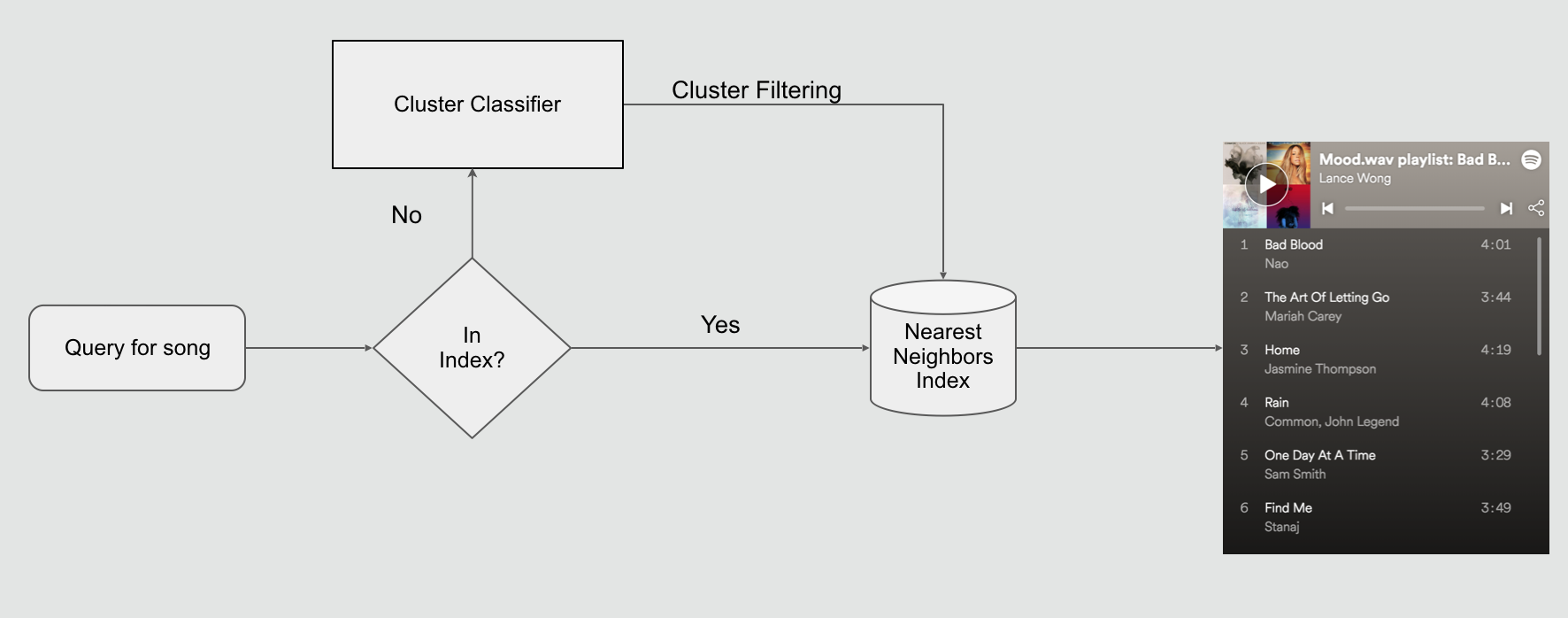

What if a song doesn’t exist in my dataset?

44,000 songs is by no means an exhaustive list of every hip-hop, RnB, or rap song in the known universe, and since I can’t necessarily tell the model to quickly find the lyrics of a given song, I need a proxy for where that song would sit among the clusters. Using the clusters from K-means as labels, I trained and validated a Random Forest Classifier on each song’s audio features to see if I could tie a song’s structure to its lyrical content label. At its best, my model could accurately predict a song’s label 48.8% of the time. However, if I expanded to the top two labels, my model would be correct 82.5% of the time.
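To make the "top two labels" metric concrete, here is a short sketch of top-k accuracy: a prediction counts as correct if the true label is among the k most probable classes. The probabilities below are invented, and the `party` label is a hypothetical cluster name used only for illustration.

```python
def top_k_accuracy(probabilities, true_labels, k=1):
    """Fraction of songs whose true label is among the k most probable."""
    hits = 0
    for probs, truth in zip(probabilities, true_labels):
        ranked = sorted(probs, key=probs.get, reverse=True)
        if truth in ranked[:k]:
            hits += 1
    return hits / len(true_labels)


# Invented class probabilities for three songs.
predicted = [
    {"found_love": 0.50, "lost_love": 0.40, "party": 0.10},
    {"found_love": 0.45, "lost_love": 0.35, "party": 0.20},
    {"found_love": 0.10, "lost_love": 0.30, "party": 0.60},
]
actual = ["found_love", "lost_love", "found_love"]

print(top_k_accuracy(predicted, actual, k=1))
print(top_k_accuracy(predicted, actual, k=2))
```

The second song shows why the two numbers differ: its true label is only the second most likely class, so it is a miss at k=1 but a hit at k=2.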

Putting it all together

After establishing the clusters as well as the classifier, I moved on to application development. For my application to work, I used ANNOY, an approximate nearest neighbors library, to find a set number of songs close to a given song based on audio features and cluster label.

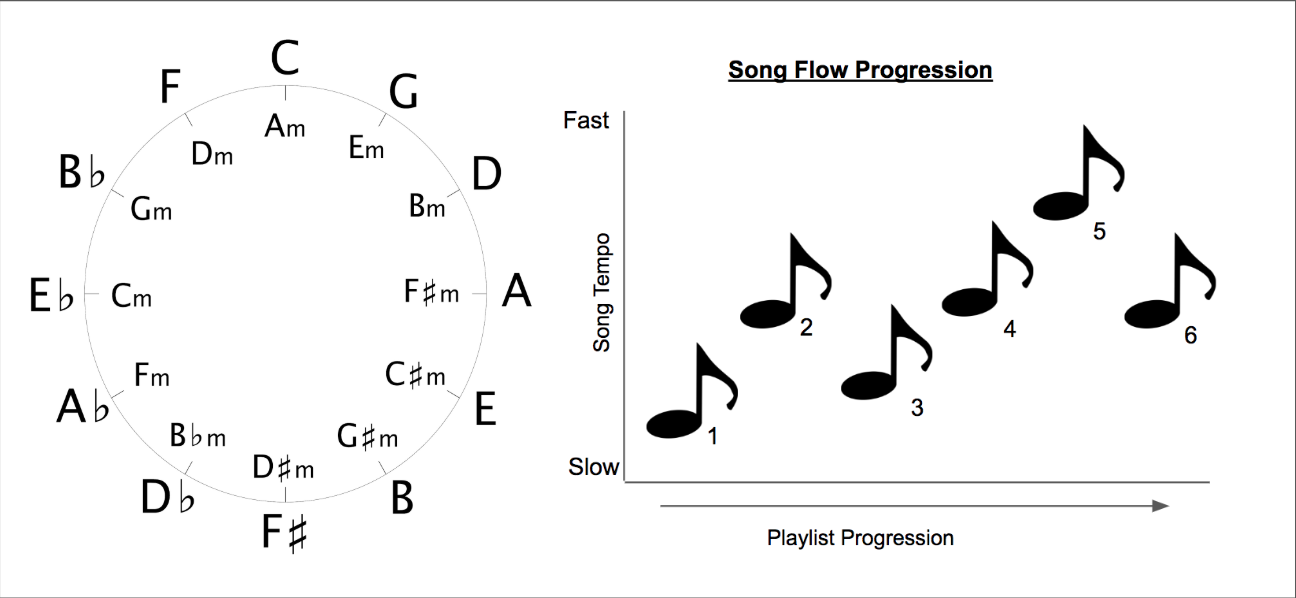
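The idea behind this lookup can be sketched with an exact, brute-force search. This is a stand-in for ANNOY, not its API, and it rests on two assumptions: that the cluster label acts as a filter, and that distance is Euclidean over the audio features. The track names and two-value feature tuples are invented.

```python
import math


def nearest_songs(query_id, songs, n=2):
    """Return the n songs closest to `query_id` within the same cluster."""
    query = songs[query_id]
    candidates = [
        (math.dist(query["features"], song["features"]), song_id)
        for song_id, song in songs.items()
        if song_id != query_id and song["cluster"] == query["cluster"]
    ]
    return [song_id for _, song_id in sorted(candidates)[:n]]


# Invented catalog: features are illustrative values on a 0-1 scale.
catalog = {
    "track_1": {"cluster": 0, "features": (0.80, 0.70)},
    "track_2": {"cluster": 0, "features": (0.78, 0.72)},
    "track_3": {"cluster": 0, "features": (0.20, 0.30)},
    "track_4": {"cluster": 1, "features": (0.81, 0.70)},
}
print(nearest_songs("track_1", catalog, n=2))
```

Note that `track_4` is the closest song by audio features alone, but it is skipped because it belongs to a different cluster. A brute-force scan like this is fine for a toy catalog; an approximate index trades a little accuracy for much faster queries at the scale of tens of thousands of songs.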

From there, I created a modified version of a traveling salesman algorithm, using relative key and BPM as “coordinates” when finding the shortest path. Relative key and BPM are advantageous for this problem because they most closely resemble the “Circle of 5ths”, a popular technique DJs use to create mixes.
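One simple way to approximate this kind of ordering is a greedy nearest-neighbor tour: start from one song and always move to the cheapest remaining transition. The sketch below is not the modified algorithm used in the project, just an illustration under stated assumptions: keys are pitch classes 0-11 (0 = C), major/minor mode is ignored, key distance is counted in steps around the circle of fifths, and the hypothetical `bpm_scale` parameter decides how many BPM count as much as one key step.

```python
def key_distance(key_a, key_b):
    """Steps between two pitch classes (0-11) around the circle of fifths."""
    # Multiplying by 7 (a fifth, in semitones) maps pitch class to circle position.
    pos_a = (key_a * 7) % 12
    pos_b = (key_b * 7) % 12
    diff = abs(pos_a - pos_b)
    return min(diff, 12 - diff)


def transition_cost(a, b, bpm_scale=10.0):
    """Combined key and tempo distance between two songs (assumed weighting)."""
    return key_distance(a["key"], b["key"]) + abs(a["bpm"] - b["bpm"]) / bpm_scale


def order_mix(songs, start=0):
    """Greedy nearest-neighbor tour: always take the cheapest next transition."""
    remaining = list(range(len(songs)))
    order = [remaining.pop(start)]
    while remaining:
        current = songs[order[-1]]
        nxt = min(remaining, key=lambda i: transition_cost(current, songs[i]))
        remaining.remove(nxt)
        order.append(nxt)
    return [songs[i]["title"] for i in order]


# Invented songs: key is a pitch class, bpm is tempo.
songs = [
    {"title": "A", "key": 0, "bpm": 100},
    {"title": "B", "key": 6, "bpm": 140},
    {"title": "C", "key": 7, "bpm": 102},
    {"title": "D", "key": 2, "bpm": 110},
]
print(order_mix(songs))
```

The greedy tour is a heuristic, so it does not guarantee the shortest overall path, but it keeps each individual transition small, which is what matters most when one song fades into the next.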

See it in action!

The video above shows a quick demonstration of my application in action. You can see that the first song flows well with the second song. I can now listen to similar songs with similar flows, which makes for a better user experience.

For this project, I was able to take various techniques that I picked up during my time here at Metis and package them into a single application that I am passionate about.